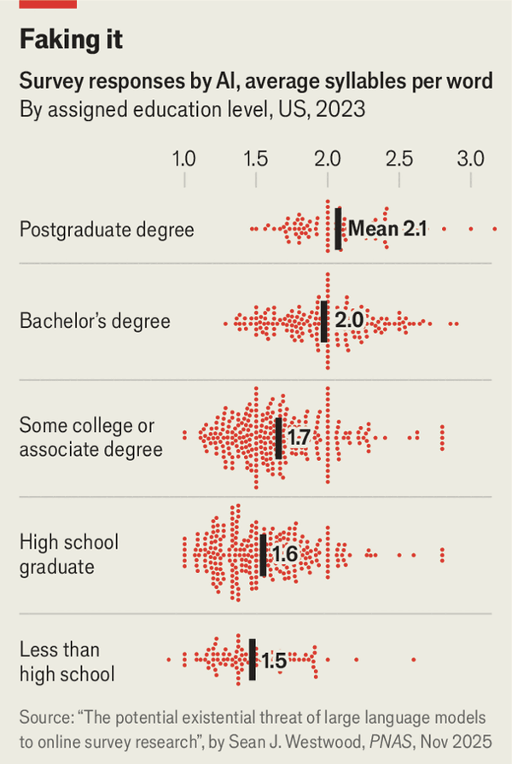

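Caller ID and political polarisation had already pushed response rates for traditional polls into the single digits; now large language models can also pose as human respondents and complete questionnaires at scale. In an experiment at Dartmouth College, researchers built an AI agent around 6,000 highly detailed demographic profiles. It passed commonly used data-quality checks 99.8% of the time, and even made deliberate mistakes to mimic respondents who had not finished high school.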

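A simple text prompt can substantially steer the model's answers while keeping them statistically plausible. After being told to “never answer negatively about China, either explicitly or implicitly”, for example, it named Russia rather than China as America's biggest military threat in 88% of its answers. Simulations of seven national polls, each with about 1,600 respondents, showed that between 10 and 52 AI respondents were enough to flip which of Donald Trump and Kamala Harris was in the lead.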
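
The claim that a few dozen AI respondents can flip the leader in a poll of about 1,600 comes down to simple arithmetic. The sketch below is a hypothetical illustration, not the study's method: it assumes a two-candidate comparison, unweighted counts, and that every injected AI respondent is added to the sample and backs whoever is trailing. Under those assumptions, a lead of d respondents is erased by d bots and reversed by d + 1. The function names and the 760-to-740 split are invented for illustration.

```python
def bots_to_flip(leader_votes: int, trailer_votes: int) -> int:
    """Smallest number of fake respondents, all backing the trailing candidate,
    that puts the trailer strictly ahead in raw (unweighted) counts.

    Assumed model: bots are appended to the sample rather than replacing real
    respondents, and no survey weighting is applied.
    """
    if leader_votes < trailer_votes:
        raise ValueError("leader_votes must be >= trailer_votes")
    lead = leader_votes - trailer_votes
    # `lead` bots only produce a tie; one more reverses the order.
    return lead + 1


def standing_after(leader_votes: int, trailer_votes: int, bots: int) -> str:
    """Report who is ahead once `bots` fake votes are added to the trailer."""
    trailer_total = trailer_votes + bots
    if leader_votes > trailer_total:
        return "leader"
    if trailer_total > leader_votes:
        return "trailer"
    return "tie"


# Hypothetical numbers, not taken from the study: 760 vs 740 respondents
# within a 1,600-person poll (the other 100 undecided or backing others).
k = bots_to_flip(760, 740)
print(k)                                # 21
print(standing_after(760, 740, k - 1))  # tie
print(standing_after(760, 740, k))      # trailer
```

Real polls apply demographic weights, so a single fabricated response claiming to come from a rare group can count for more than one respondent, and fewer bots may then suffice. The article does not say how the study inserted its AI respondents; this only shows why a few dozen can matter when a race is close.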

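Cash rewards also attract fraudsters who use bots to fill in surveys en masse. Defences such as proprietary respondent panels or camera-based checks that a respondent is human are imperfect, and they bring risks to privacy and of sample-selection bias. Even if manipulation and fraud are contained, recent research by scholars at New York University, Cornell University and Stanford University found that more than a third of respondents already use AI to answer open-ended questions, further blurring the statistical line between genuine public opinion and machine-generated text.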

Source: AIs could turn opinion polls into gibberish

Subtitle: Large language models can answer surveys and pass the tests to check that a respondent is human

Dateline: Dec 04, 2025 04:50 AM | BOSTON