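Nvidia shares fell as much as 3% after reports that Meta is in talks to adopt Google's tensor processing units (TPUs) in 2027, a sign that the threat TPUs pose to Nvidia's dominance in accelerators is growing. Alphabet shares rose 2.4% and lifted Asian supply-chain stocks: South Korea's IsuPetasys jumped 18% and Taiwan's MediaTek gained nearly 5%. Google had already signed an agreement to supply Anthropic with up to 1 million TPUs, which established TPUs as an alternative architecture able to compete with Nvidia GPUs. Market commentators see the move as strong validation of TPUs' viability, one that could push more buyers toward Google's accelerators.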

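Bloomberg Intelligence estimates that Meta plans at least $100 billion in capital expenditures in 2026, of which roughly $40–50 billion would go toward purchasing inference accelerators. That implies demand for Google Cloud's TPUs and Gemini could grow faster than demand at other cloud providers. The reports also indicate that Meta may rent TPUs from Google Cloud next year. These developments underline companies' desire to reduce their reliance on Nvidia, particularly amid growing global concern over how concentrated the AI-compute supply chain has become. Adoption by Anthropic, together with Meta's potential adoption, further strengthens TPUs' position in the markets for large-model training and inference.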

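Google's Ironwood TPU, launched in 2025, is an application-specific integrated circuit (ASIC). Its architecture differs from that of the GPU, which was originally designed for graphics rendering and only later became widely used for AI. In recent years TPUs have been refined into accelerators for both training and inference, and they continue to be optimized through cross-team collaboration between Google and DeepMind on large models such as Gemini. As enterprises place greater weight on energy efficiency, compute density, and customizability, TPUs are becoming an increasingly attractive alternative. Overall, external adoption of TPUs appears to be entering a phase of acceleration, creating long-term competitive pressure on Nvidia.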

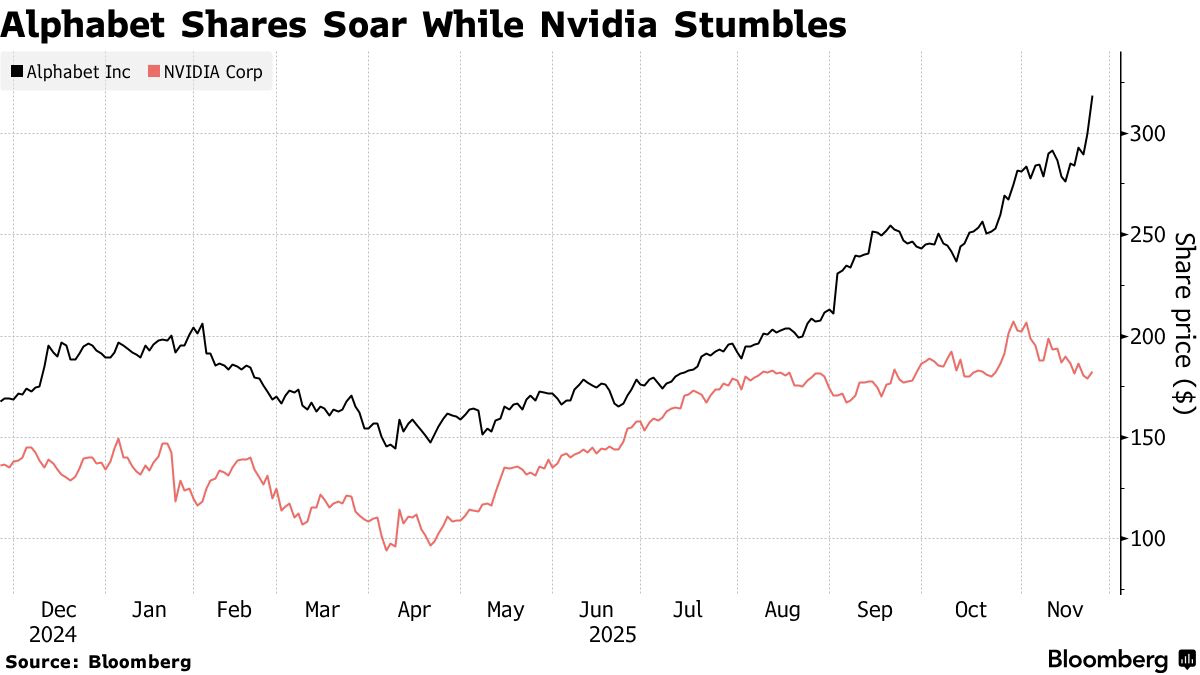

Nvidia shares fell as much as 3% after reports that Meta is in talks to deploy Google’s tensor processing units (TPUs) in its 2027 data centers, signaling growing competitive pressure on Nvidia’s AI accelerators. Alphabet shares rose 2.4%, while Asian suppliers gained sharply, including an 18% jump in South Korea’s IsuPetasys and nearly 5% in Taiwan’s MediaTek. Google’s prior agreement to supply up to 1 million TPUs to Anthropic helped validate TPUs as a credible alternative to Nvidia’s GPUs, prompting broader industry interest.

Bloomberg Intelligence estimates Meta's 2026 capex at a minimum of $100 billion, with $40–50 billion likely dedicated to inferencing-chip capacity, implying that demand for Google Cloud's TPUs and Gemini models could grow faster than demand at rival hyperscalers. Meta may also rent TPUs from Google next year. These developments reflect rising efforts to reduce dependence on Nvidia amid global concerns about concentrated AI-compute supply chains. Adoption by Anthropic, and potentially by Meta, strengthens TPUs' position in the training and inference markets.

Google's Ironwood TPU, introduced in 2025, is an application-specific integrated circuit rather than a general-purpose GPU, giving it advantages in efficiency and customization. TPUs have been refined over successive generations to accelerate both training and inference, and they benefit from close iteration loops between Google's chip designers and its DeepMind and Gemini model teams. As efficiency, compute density, and customization become higher priorities for enterprises, TPU deployment outside Google is accelerating, creating sustained competitive pressure for Nvidia.