越来越多研究表明,不同类型的 AI 模型正在收敛到相似的内部表示方式。尽管语言模型、视觉模型等通常使用完全不同的数据进行训练,研究发现它们在表征概念时会形成结构相近的表示,且这种相似性随着模型能力提升而增强。2024 年,麻省理工学院的四名研究者系统提出“柏拉图式表示假说”,认为这种收敛并非偶然,而是源于模型共同面对同一个现实世界的数据投影。该假说引发了显著分歧,研究者对其合理性和可验证性尚未形成共识。

从技术角度看,AI 的表示本质上是高维数值结构。单个神经网络层往往包含数千个神经元,其激活状态可表示为高维向量。向量方向的相似性用于量化不同输入在模型中的语义接近程度:在同一模型内,相似概念对应的向量距离更近。由于不同模型的向量空间不可直接对齐,研究者转而比较“相似性的相似性”,即考察不同模型中概念之间的相对结构是否一致。

具体方法通常选取一组输入(如多种动物名称),分别输入两个模型,得到各自的一组向量簇,再比较这些簇的整体几何形状。若两组簇在结构上高度相似,则说明模型对概念关系的编码方式接近。这类分析支持了跨模型收敛的经验结果,但也引发方法论争议,例如应选择哪些层、哪些输入作为代表。整体来看,数值表示的高维特性与不断扩大的模型规模,使这一收敛现象在统计上越来越显著,但其哲学含义仍在辩论之中。

A growing body of research suggests that distinct types of AI models are converging on similar internal representations. Although language and vision models are trained on entirely different data, studies find that they encode concepts in structurally similar ways, with similarity increasing as models become more capable. In 2024, four researchers at MIT formalized this pattern as the “Platonic representation hypothesis,” arguing that convergence reflects shared exposure to the same underlying world. The proposal sparked sharp disagreement, with no consensus yet on its validity or testability.

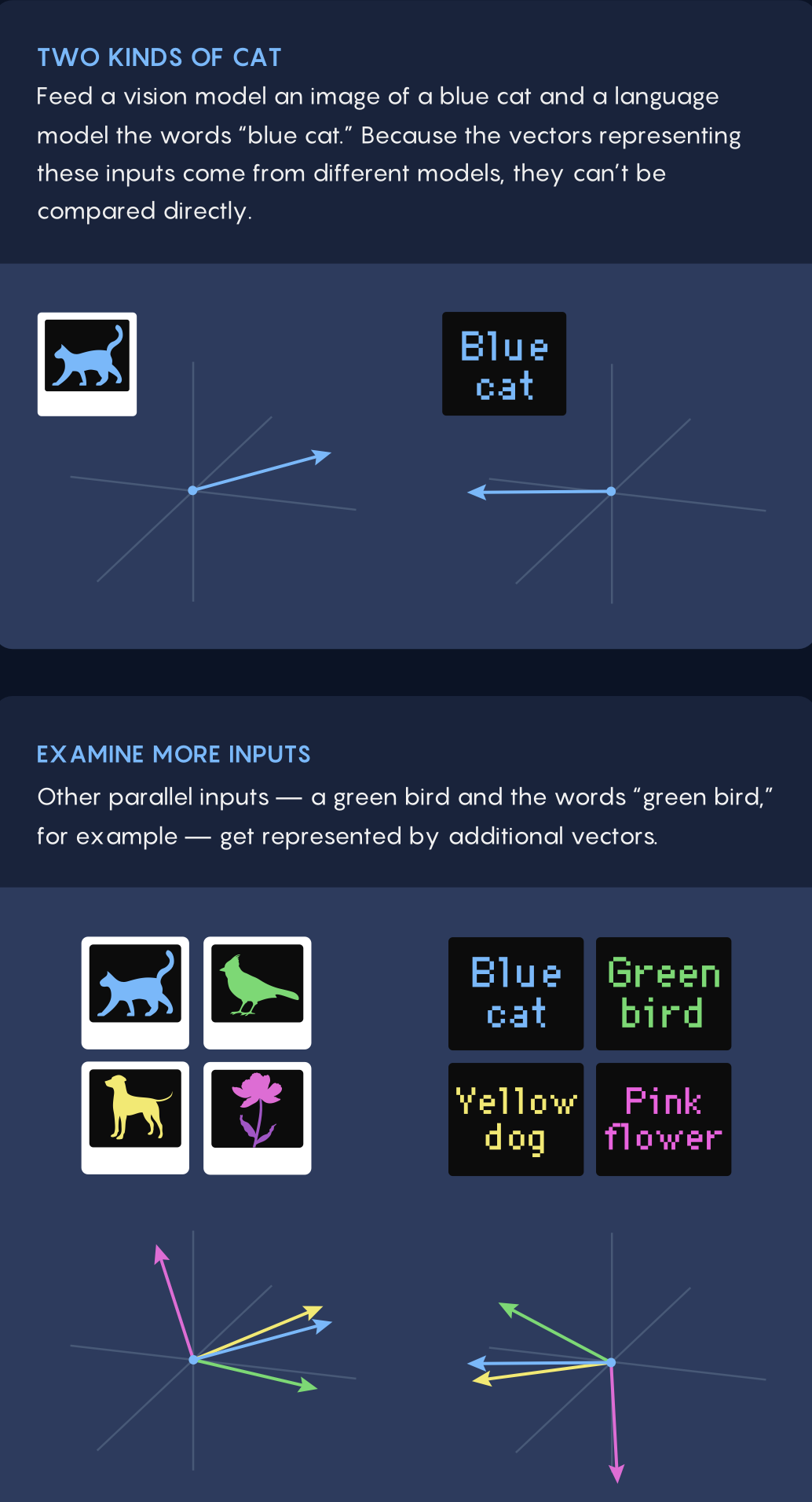

Technically, AI representations are high-dimensional numerical structures. A single neural network layer often contains thousands of neurons, whose activations form a vector in a high-dimensional space. Similarity between vectors is used to quantify semantic closeness: within one model, related concepts correspond to nearby vectors. Because vector spaces from different models cannot be directly aligned, researchers instead compare higher-order structure by examining whether relationships among concepts are preserved across models.

A common method selects a set of inputs, such as animal names, feeds them into two models, and analyzes the resulting vector clusters. Researchers then compare the global geometry of these clusters rather than individual vectors. High structural similarity indicates that the models encode conceptual relationships in comparable ways. While such analyses provide quantitative evidence of convergence, debates remain over which layers and inputs are representative. As model size and dimensionality continue to grow, statistical signals of convergence strengthen, even as their deeper interpretation remains contested.